The launch of ChatGPT (GPT-3.5) in November 2022 was a landmark moment for artificial intelligence – OpenAI pulled powerful language models out of research labs and gave the average person access to realistic and human-like AI conversation.

Just three years later, the current version of ChatGPT is much more intuitive and faster than its predecessors, makes fewer errors (or hallucinations), and can produce images and voice. Also, pretty much everyone uses it. For everything.

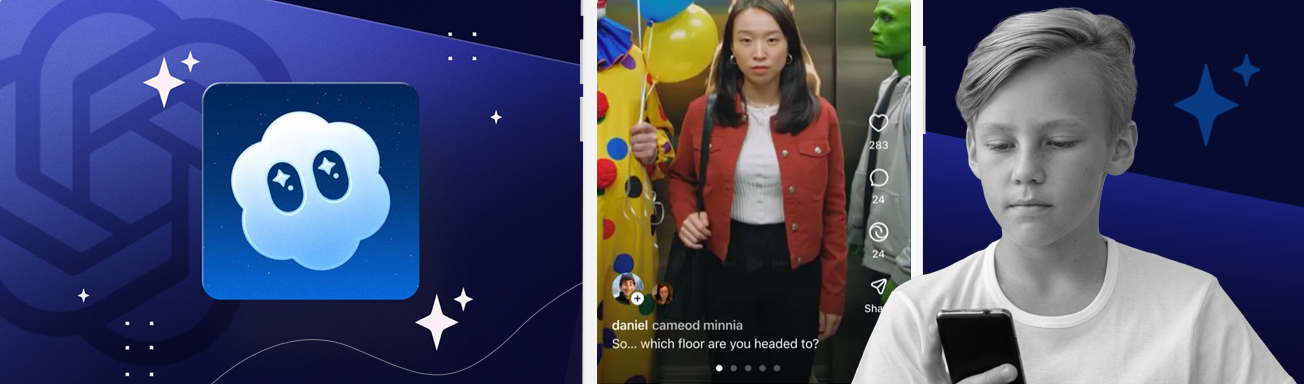

OpenAI believes the launch of Sora 2 is the “GPT-3.5 moment” for AI video creation, allowing anyone to produce realistic AI videos from simple text prompts.

What is Sora 2, and what can you do with it?

Sora 2 is an AI video generation app created by OpenAI (the AI research company behind ChatGPT) that allows users to produce short videos from a text prompt.

This version represents a huge leap forward from the original Sora model, which produced videos that nearly everyone could recognize as fake: teleporting objects, warped motion, inconsistent body movement, and items that changed from shot to shot, to name a few of the common flaws. Sora 2, by contrast, generates much more realistic movement and more stable worlds and, unlike the video-only original Sora, adds dialogue, sound effects, and audio that match the scene.

On top of the realism upgrades, Sora 2 introduces “Cameos,” a feature that allows users to upload their face and voice to appear in AI-generated videos, and it adds a TikTok/Instagram-style feed of AI-generated content.

At the time of writing, Sora 2 is available as an iOS and Android app and on desktop, but only in a limited number of countries.

![Worried teenage girl using smartphone](https://static.qustodio.com/public-site/uploads/2025/11/27135705/2025-12-Blog-Is-Sora2-safe-for-kids_Inside-Image.png)

Sora 2: the risks parents need to know

Inappropriate or disturbing content

It might be AI, but videos featuring dangerous stunts and violence can look very real and appeal to young users. Such content is often presented without warnings, with upbeat music, and framed in a cheerful, carefree way – potentially desensitizing teens to serious and violent themes.

Minimal parental controls

At the time of writing, the closest thing Sora offers to parental controls is a small set of settings for teen accounts connected to a parent's ChatGPT account. A parent can de-personalize their teen's Sora feed, choose whether their teen can send and receive direct messages, and decide whether their teen gets an uninterrupted feed of content while scrolling. That's about it.

Parents cannot monitor a teen’s activity on Sora, restrict concerning content, or – perhaps most importantly – prevent a teen from creating and sharing Cameos or stop their likeness from being misused.

Identity manipulation and deepfakes

Sora 2’s “Cameos” feature allows users to upload their face and voice so they, and others, can use their likenesses in AI videos. While this can mean hours of fun for teens, Cameos opens a can of worms when it comes to privacy and safety risks.

Once your child has uploaded their likeness, other users could create realistic videos of them in scenarios they haven’t consented to, which can then be downloaded and redistributed on other platforms – potentially leading to cyberbullying and other forms of harassment. For example, a child could create a video featuring a classmate doing something humiliating, which can then be spread throughout the school and beyond.

What’s more, once uploaded to Cameos, a user’s face and voice may be used to train OpenAI’s models and be held in facial recognition databases permanently.

Misinformation

The realism of Sora’s AI videos, their lack of moderation, and the speed at which users can generate them mean that videos containing misinformation and fake news can spread quickly on Sora and other platforms. Sora’s personalized feed presents many of the same issues as Instagram Reels, TikTok, and YouTube Shorts, including echo chambers: the risk of being repeatedly exposed to the same kinds of harmful ideas with little or no exposure to alternative views.

Videos created by Sora 2 carry digital watermarks to show viewers that they aren’t real; however, these watermarks can be removed with third-party programs.

Sora 2 and teens: Our recommendations

Until OpenAI introduces robust parental control features, we don’t recommend that teens use Sora 2. It’s easy to find content unsuitable for kids, especially videos depicting realistic-looking violence or dangerous situations, and these videos don’t come with warnings. Teens are still developing their critical thinking skills, so they can be more susceptible to believing the misinformation and general fakery that’s often spread through AI videos.

Our main concern with Sora 2 is the “Cameos” feature. Uploading a kid’s face and voice to Sora, where their likeness can be put into videos that others can edit, download, and share, is fraught with potential problems and can open the door to blackmail, cyberbullying, and other forms of harassment.

If you’ve considered these concerns and still want to allow your teen to use Sora 2, here are a few safety recommendations:

- Connect your ChatGPT accounts. By pairing an adult account with a teen account, parents can make use of Sora’s somewhat limited parental controls.

- Encourage critical thinking. Watch some videos together and see if you can spot giveaways that the video is AI-generated. Talk about why it’s important to identify and challenge misinformation.

- Set rules for Cameos. As the feature is arguably the most dangerous aspect of Sora 2, Cameos should be front and center of any discussion. Whether or not you ban Cameos altogether, this is a good opportunity to remind kids (and anyone else) of the risks of uploading pictures of themselves, and that they should never upload pictures of others without their express permission.

- Ensure Cameo sharing controls are set to “Only me” or “People I approve.” These are the default settings for teen accounts, but remind your teen that limiting access to approved friends doesn’t stop those friends from downloading videos featuring their likeness and sharing them on other platforms or in DMs.

- Stay informed. Follow announcements from OpenAI to stay in the loop about new features and updated parental controls.

- Use external parental control tools. An all-in-one parental control solution like Qustodio allows you to set limits on how much your child uses Sora 2 and get an alert when they open the app for the first time. If you decide Sora 2 is too unsafe for your child, you can block the app from being opened.

Sora 2 is a major leap forward in AI video generation, allowing users to create realistic short videos with audio from simple text prompts, but unfortunately, its parental controls have not kept pace. This lack of safety features, combined with “Cameos” and the disturbing, violent, and misleading content found on its social-media-style feed, means we cannot recommend Sora 2 for kids at this time.